Artificial intelligence has entered a phase where progress is increasingly constrained not by algorithms or compute units, but by memory. While GPUs and AI accelerators dominate headlines, High-Bandwidth Memory, or HBM, has quietly become the most critical and scarce component in the AI hardware stack. Industry analysts estimate that HBM revenue grew by approximately 65 to 75 percent year over year in 2025, far outpacing the broader semiconductor market, which expanded by roughly 13 percent. This imbalance reflects a structural constraint rather than a temporary supply shock.

HBM’s role in modern AI systems is fundamental. Training a large language model with hundreds of billions of parameters requires not only an enormous volume of arithmetic, on the order of 10^23 floating-point operations or more, but also sustained access to vast amounts of data at extremely high bandwidth. As a result, memory throughput, not raw compute, increasingly determines system performance. The global race to scale AI has therefore turned HBM into a strategic resource, with economic, industrial, and geopolitical implications.
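One way to see why bandwidth can matter more than peak compute is the roofline model, a standard performance-analysis technique that is not drawn from this article's sources. The minimal Python sketch below assumes a hypothetical accelerator with 1,000 TFLOP/s of 16-bit compute and 3.35 TB/s of HBM bandwidth; the function names are illustrative.

```python
"""Roofline sketch: is a kernel limited by compute or by memory bandwidth?"""


def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float,
                     intensity_flops_per_byte: float) -> float:
    """Roofline bound in FLOP/s: the lower of the compute and memory ceilings."""
    return min(peak_flops, bandwidth_bytes_per_s * intensity_flops_per_byte)


def ridge_point(peak_flops: float, bandwidth_bytes_per_s: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return peak_flops / bandwidth_bytes_per_s


if __name__ == "__main__":
    # Hypothetical accelerator figures, for illustration only.
    PEAK_FLOPS = 1.0e15  # 1,000 TFLOP/s of 16-bit compute
    HBM_BW = 3.35e12     # 3.35 TB/s of HBM bandwidth

    # Matrix-vector product over 16-bit weights: one multiply and one add
    # (2 FLOPs) per 2-byte weight read, so about 1 FLOP per byte.
    gemv = attainable_flops(PEAK_FLOPS, HBM_BW, 1.0)

    print(f"ridge point: {ridge_point(PEAK_FLOPS, HBM_BW):.0f} FLOP/byte")
    print(f"GEMV bound: {gemv / 1e12:.2f} TFLOP/s "
          f"({gemv / PEAK_FLOPS:.2%} of peak)")
```

With those assumed numbers, a kernel needs roughly 300 FLOPs per byte of memory traffic before compute becomes the limit. A matrix-vector product over 16-bit weights, typical of small-batch inference, performs about one FLOP per byte and so runs at well under 1 percent of peak, bound entirely by memory.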

What High-Bandwidth Memory Is and Why It Matters Quantitatively

High-Bandwidth Memory is a stacked DRAM technology designed to maximize bandwidth per unit of energy. Unlike DDR5 server memory, which typically delivers 50 to 60 gigabytes per second per channel, a single HBM3 stack can exceed 800 gigabytes per second. A modern AI accelerator integrates between four and eight HBM stacks, producing aggregate bandwidths of roughly 3 to 5 terabytes per second, and the newest HBM3E-based parts go higher still.
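The per-stack figure follows from interface width and pin speed. As a rough sketch, assuming nominal HBM3 parameters (a 1024-bit interface at 6.4 Gb/s per pin) and a 64-bit DDR5-6400 channel, peak bandwidth is the bus width times the per-pin rate, divided by eight to convert bits to bytes:

```python
"""Peak interface bandwidth from bus width and per-pin data rate."""


def peak_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Theoretical peak in GB/s (decimal): data bits x Gb/s per pin / 8."""
    return bus_width_bits * pin_rate_gbps / 8


if __name__ == "__main__":
    # Assumed nominal parameters: HBM3 stack = 1024 data bits at 6.4 Gb/s/pin;
    # DDR5-6400 channel = 64 data bits at 6.4 Gb/s/pin (ECC bits ignored).
    hbm3_stack = peak_bandwidth_gb_s(1024, 6.4)
    ddr5_channel = peak_bandwidth_gb_s(64, 6.4)
    print(f"HBM3 stack:   {hbm3_stack:.1f} GB/s")
    print(f"DDR5 channel: {ddr5_channel:.1f} GB/s")

    # Aggregate peak for several stack counts (all stacks at full pin rate).
    for stacks in (4, 6, 8):
        print(f"{stacks} stacks: {stacks * hbm3_stack / 1000:.1f} TB/s")
```

These are theoretical peaks. Shipping products often run their pins below the maximum rate or sometimes leave a stack site unused, which is why real accelerators land below what the stack-count arithmetic alone suggests.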

This bandwidth is essential for AI workloads. A transformer model with 500 billion parameters stored in 16-bit precision requires approximately one terabyte of memory just to hold weights, excluding activations and optimizer states. During training, parameters are accessed repeatedly across thousands of iterations, making memory bandwidth a dominant factor in throughput. Even modest stalls translate into significant efficiency losses when systems operate at power envelopes exceeding 700 watts per accelerator.
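To see how quickly optimizer state adds to the weight footprint, the sketch below uses a common accounting for mixed-precision training with the Adam optimizer, the one popularized by the ZeRO paper: 16 bytes per parameter before any activations are stored. The 80 GB per-device capacity in the usage example is a hypothetical figure chosen for illustration.

```python
"""Model-state memory for mixed-precision Adam training (activations excluded)."""
import math

# Bytes per parameter, following the common ZeRO-style accounting.
BYTES_PER_PARAM = {
    "weights": 2,            # 16-bit working copy of the weights
    "gradients": 2,          # 16-bit gradients
    "optimizer_states": 12,  # fp32 master weights + Adam moments m and v (4 each)
}


def training_state_bytes(num_params: float) -> dict:
    """Per-component and total bytes of model state."""
    breakdown = {name: num_params * b for name, b in BYTES_PER_PARAM.items()}
    breakdown["total"] = sum(breakdown.values())
    return breakdown


def min_devices(total_bytes: float, bytes_per_device: float) -> int:
    """Devices needed just to hold the state, assuming perfect sharding."""
    return math.ceil(total_bytes / bytes_per_device)


if __name__ == "__main__":
    state = training_state_bytes(500e9)  # 500-billion-parameter model
    HYPOTHETICAL_HBM_PER_DEVICE = 80e9   # assumed 80 GB per accelerator
    for name, size in state.items():
        print(f"{name:>16}: {size / 1e12:.1f} TB")
    print("devices (state only):",
          min_devices(state["total"], HYPOTHETICAL_HBM_PER_DEVICE))
```

For the 500-billion-parameter example, the weights alone are 1 TB, but full model state is about 8 TB, or at least 100 devices of the assumed capacity before a single activation is stored.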

Energy efficiency further amplifies HBM’s importance. Data movement can account for 30 to 50 percent of total energy consumption in AI training workloads. HBM reduces energy per bit transferred by a factor of three to five compared with off-package memory. In data centers where power availability and cooling costs increasingly cap expansion, this efficiency difference is decisive.
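The energy argument reduces to multiplying bits moved by energy per bit. The sketch below uses assumed, illustrative values of 4 pJ/bit for HBM and 15 pJ/bit for off-package memory (a ratio of about 3.75, inside the three-to-five range above); these are not vendor specifications.

```python
"""Memory-interface energy and power from an assumed energy-per-bit figure."""

PICOJOULE = 1e-12  # joules


def transfer_energy_j(num_bytes: float, pj_per_bit: float) -> float:
    """Energy in joules to move num_bytes at pj_per_bit picojoules per bit."""
    return num_bytes * 8 * pj_per_bit * PICOJOULE


def transfer_power_w(bytes_per_s: float, pj_per_bit: float) -> float:
    """Sustained interface power in watts (joules per second) at a given rate."""
    return transfer_energy_j(bytes_per_s, pj_per_bit)


if __name__ == "__main__":
    # Illustrative assumptions, not vendor data.
    ASSUMED_PJ_PER_BIT = {"HBM (on-package)": 4.0, "off-package DRAM": 15.0}
    RATE = 3e12  # sustained 3 TB/s

    for name, pj in ASSUMED_PJ_PER_BIT.items():
        print(f"{name}: {transfer_power_w(RATE, pj):.0f} W at 3 TB/s, "
              f"{transfer_energy_j(1e12, pj):.0f} J per TB moved")
```

Under those assumptions, sustaining 3 TB/s costs roughly 96 W over HBM versus roughly 360 W off-package, a gap that is a large fraction of a 700-watt accelerator budget.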

Why GPUs Alone No Longer Define AI Capacity

GPU production capacity has expanded rapidly. Leading-edge foundries increased advanced-node wafer starts by roughly 20 percent between 2023 and 2025. However, GPU availability does not translate directly into deployable AI capacity. Each high-end accelerator requires multiple HBM stacks, and without them, the GPU cannot operate at its designed performance envelope.

In 2024 and 2025, several hyperscalers reported internal utilization gaps in which accelerators were delivered on time but could not be fully commissioned because HBM shipments were delayed. In practical terms, an accelerator without HBM is a stranded asset: the capital has been spent, but it delivers no compute. This has shifted procurement dynamics. Memory availability, not compute silicon, now dictates deployment schedules.
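The procurement logic is a simple bottleneck: deployable capacity is the minimum of what each input supports. A minimal sketch with hypothetical numbers and illustrative function names:

```python
"""Deployable accelerators are capped by whichever input runs out first."""


def deployable_accelerators(compute_units: int, hbm_stacks: int,
                            stacks_per_accelerator: int) -> int:
    """Accelerators that can be completed given compute units and HBM stacks."""
    return min(compute_units, hbm_stacks // stacks_per_accelerator)


def stranded_units(compute_units: int, hbm_stacks: int,
                   stacks_per_accelerator: int) -> int:
    """Compute units left idle for lack of memory."""
    return compute_units - deployable_accelerators(
        compute_units, hbm_stacks, stacks_per_accelerator)


if __name__ == "__main__":
    # Hypothetical procurement snapshot.
    units, stacks, per_unit = 10_000, 60_000, 8
    print("deployable:", deployable_accelerators(units, stacks, per_unit))
    print("stranded:  ", stranded_units(units, stacks, per_unit))
```

With 10,000 compute units delivered but only 60,000 stacks at eight per accelerator, 2,500 units sit idle no matter how many more GPUs arrive.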

In cost terms, HBM has also grown in importance. For top-tier AI accelerators, memory and advanced packaging together can represent 35 to 45 percent of total bill-of-materials cost. Five years ago, this figure was closer to 20 percent. This shift has profound implications for pricing, margins, and system-level economics.

The Concentrated and Capacity-Limited HBM Supply Chain

HBM production is one of the most concentrated segments of the semiconductor industry. As of 2025, three companies account for nearly 100 percent of global supply. SK hynix holds an estimated 50 to 55 percent market share, Samsung approximately 35 to 40 percent, and Micron the remainder.

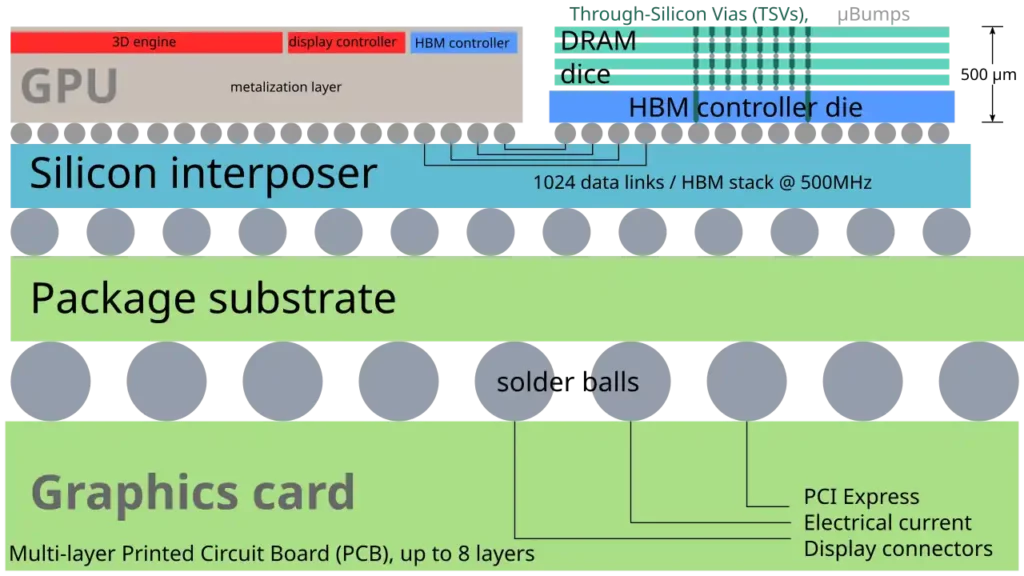

This concentration reflects the complexity of HBM manufacturing. Producing a single HBM stack requires eight to twelve DRAM dies, each fabricated at advanced nodes, thinned to less than 50 micrometers, and vertically interconnected using thousands of through-silicon vias. Because every die in a stack must work, yield losses compound layer by layer. At a defect rate of just 1 percent per die, only about 89 percent of 12-high stacks come out good (0.99^12 ≈ 0.886), an 11 percent loss before any bonding or interconnect failures are counted.
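The compounding effect can be sketched with a simple independent-failure model: if each die is good with probability p, a stack of n dies is good with probability p^n. This is a simplification (production flows screen known-good dies before stacking, and bonding adds failure modes of its own), offered only to show how losses scale with stack height.

```python
"""Compounded stack yield under an independent per-die failure model."""


def stack_yield(die_yield: float, dies_per_stack: int) -> float:
    """Probability that all dies in a stack are good (failures independent)."""
    return die_yield ** dies_per_stack


if __name__ == "__main__":
    DIE_YIELD = 0.99  # 1 percent defect rate per die
    for height in (8, 12, 16):
        loss = 1 - stack_yield(DIE_YIELD, height)
        print(f"{height}-high stack: {loss:.1%} of stacks lost")
```

Under this model, a 1 percent per-die defect rate costs about 8 percent of 8-high stacks, 11 percent of 12-high stacks, and 15 percent of 16-high stacks.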

Advanced packaging further constrains output. Silicon interposers and chip-on-wafer-on-substrate assembly require specialized facilities. In 2024, total global advanced packaging capacity suitable for HBM was estimated to be sufficient for fewer than 20 million HBM stacks annually. Demand projections for AI accelerators alone exceeded this figure by 2026, even before accounting for networking and other high-performance computing applications.
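Translating a stack budget into accelerators is a division by stacks per accelerator. The sketch below treats the 20-million-stack figure as a hard cap; the 3-million-accelerator demand in the example is hypothetical, not a sourced projection.

```python
"""How many accelerators a fixed annual HBM stack budget can supply."""


def max_accelerators(stack_capacity: int, stacks_per_accelerator: int) -> int:
    """Whole accelerators that the stack budget can populate."""
    return stack_capacity // stacks_per_accelerator


def stack_shortfall(demand_accelerators: int, stacks_per_accelerator: int,
                    stack_capacity: int) -> int:
    """Stacks missing to meet demand (0 if capacity suffices)."""
    return max(0, demand_accelerators * stacks_per_accelerator - stack_capacity)


if __name__ == "__main__":
    CAPACITY = 20_000_000            # the article's 2024 estimate, treated as a cap
    HYPOTHETICAL_DEMAND = 3_000_000  # illustrative accelerator demand
    for per_unit in (4, 6, 8):
        short = stack_shortfall(HYPOTHETICAL_DEMAND, per_unit, CAPACITY)
        print(f"{per_unit} stacks/accelerator: "
              f"{max_accelerators(CAPACITY, per_unit):,} accelerators max, "
              f"shortfall {short:,} stacks")
```

At eight stacks per accelerator, 20 million stacks cover only 2.5 million accelerators, so the hypothetical 3 million units would be short by 4 million stacks.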

Economics of the HBM Boom

The financial impact of the HBM shortage is visible across the semiconductor value chain. Average selling prices for HBM3 products increased by an estimated 20 to 30 percent between 2023 and 2025, even as traditional DRAM prices experienced volatility. Gross margins for HBM lines are estimated to exceed 50 percent for leading suppliers, compared with 25 to 30 percent for commodity DRAM.

Capital expenditure patterns reflect this shift. Memory manufacturers are allocating a growing share of investment to HBM-capable lines and advanced packaging integration. However, new capacity typically requires two to three years from investment decision to meaningful output, reinforcing near-term scarcity.

For buyers, long-term supply agreements have become the norm. Hyperscalers now commit billions of dollars in prepayments and multi-year contracts to secure priority access. In effect, HBM capacity is being reserved years in advance, limiting spot market availability and raising barriers for smaller players.

Semiconductor Nationalism and Strategic Memory Capacity

As HBM’s importance has grown, governments have begun to treat memory manufacturing as a strategic asset. South Korea has publicly identified advanced memory as a national priority, supporting domestic investment through tax incentives and infrastructure programs. The United States has included memory and advanced packaging within broader semiconductor industrial policies aimed at reducing supply chain vulnerability.

Export controls have further increased the strategic value of HBM. Restrictions on advanced AI hardware indirectly elevate the importance of memory components that enable high-performance systems. Some governments are now evaluating domestic stockpiles of critical semiconductor components, including memory, for national security and economic resilience.

This shift mirrors historical patterns in energy markets. Just as oil reserves once defined industrial power, access to advanced memory increasingly shapes AI competitiveness. The analogy is not rhetorical. Without HBM, even the most advanced processors cannot deliver meaningful AI capability.

Technical Barriers That Prevent Rapid Scaling

HBM supply cannot expand quickly due to fundamental engineering constraints. Each new generation increases stack height, bandwidth, and pin counts, raising thermal density and yield risk. Managing heat within tightly stacked dies is one of the most difficult challenges in modern semiconductor design. Even small thermal gradients can degrade reliability and shorten lifespan.

Packaging capacity remains a hard ceiling. Building a new advanced packaging facility requires investments measured in billions of dollars and lead times exceeding 30 months. Unlike wafer fabs, packaging facilities must be tightly integrated with specific process flows, limiting flexibility.

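Co-development with processor vendors adds another constraint. Although HBM generations are defined by JEDEC standards, each accelerator’s memory subsystem (interposer routing, physical interface, and increasingly customized base dies) is co-designed with the memory supplier rather than sourced as an interchangeable commodity part. Each new HBM generation therefore requires coordination across multiple companies, extending development cycles and reducing responsiveness to demand shocks.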

Impact on AI Model Development and Industry Structure

The HBM shortage is already shaping AI research. Developers are increasingly optimizing models to reduce memory footprint. Techniques such as parameter sharing, sparsity, and lower-precision formats are being adopted not primarily for efficiency, but out of necessity.
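The memory savings from lower-precision formats are easy to quantify for weights. The sketch below counts weight storage only; it ignores quantization metadata such as per-block scale factors, and it models sparsity or parameter sharing as a crude, hypothetical `stored_fraction` multiplier that omits index overhead.

```python
"""Weight-storage footprint under different numeric formats."""

# Storage bytes per weight, excluding per-block scales or other metadata.
BYTES_PER_WEIGHT = {"fp32": 4.0, "bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_bytes(num_params: float, fmt: str,
                 stored_fraction: float = 1.0) -> float:
    """Bytes needed to store the weights.

    stored_fraction is a crude, hypothetical stand-in for sparsity or
    parameter sharing (fraction of weights actually stored); index and
    metadata overhead are ignored.
    """
    return num_params * BYTES_PER_WEIGHT[fmt] * stored_fraction


if __name__ == "__main__":
    N = 500e9  # 500-billion-parameter model
    for fmt in BYTES_PER_WEIGHT:
        print(f"{fmt:>5}: {weight_bytes(N, fmt) / 1e12:.2f} TB")
    print(f"fp8, half the weights stored: "
          f"{weight_bytes(N, 'fp8', stored_fraction=0.5) / 1e12:.2f} TB")
```

For the 500-billion-parameter model discussed earlier, moving from bf16 to fp8 halves weight storage from 1 TB to 0.5 TB, and 4-bit formats reach about 0.25 TB before metadata.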

At the industry level, memory scarcity favors large incumbents. Only organizations with the capital to secure long-term supply contracts can reliably train frontier-scale models. This dynamic risks concentrating AI capability among a small number of players, potentially slowing broader innovation.

Smaller firms and academic institutions face increasing difficulty accessing the hardware required for cutting-edge research. This imbalance could have long-term implications for the diversity and direction of AI development.

Possible Mitigations and the Limits of Alternatives

Several mitigation strategies are under exploration. These include expanding advanced packaging capacity, improving yields, and supplementing HBM with emerging memory expansion technologies such as CXL-attached memory. However, none offers a near-term substitute for HBM’s combination of bandwidth, latency, and efficiency.

Alternative memory technologies remain years away from commercial maturity at scale. In the meantime, incremental capacity increases and efficiency gains will likely be absorbed immediately by rising AI demand.

Outlook

High-Bandwidth Memory has emerged as a defining constraint of the AI era. Its scarcity is not a temporary imbalance but a structural feature of the current technology stack. With demand growing faster than production capacity and geopolitical factors further complicating supply chains, HBM will remain a central factor shaping AI economics and strategy for the rest of the decade.

Understanding the HBM crunch is therefore essential to understanding the future of artificial intelligence itself. In the race for AI leadership, memory has become as decisive as compute, and in many cases, more so.

References and Sources

Micron Technology projects HBM total addressable market over $35 billion in 2025 and reports strong demand and revenue growth for HBM3E. https://investors.micron.com/static-files/52ce0c07-49dc-4eab-b1d2-a67bde373bca

Research shows HBM capacity expansion, 3D stacking trends, and projected production growth with tight supply–demand dynamics. https://www.htfmarketintelligence.com/press-release/global-high-bandwidth-memory-hbm-market

An industry report projects HBM market growth from about $2.9 billion in 2024 to $15.67 billion by 2032 at a CAGR of ~24 percent. https://www.prnewswire.com/news-releases/investor-insight-hbm-market-poised-for-26-10-cagr-reaching-usd-22-57-billion-by-2034--datam-intelligence-302555114.html

TrendForce and market trackers report SK hynix with a dominant share of global HBM supply for 2025, with capacity largely sold out into 2026. https://www.anandtech.com/show/21382/sk-hynixs-hbm-memory-supply-sold-out-through-2025

SK hynix reported high growth in revenue and operating profit in 2025 largely driven by HBM demand, with capacity booked into 2026. https://www.ft.com/content/64e7dfb0-b32c-417e-b411-efc9098e1e3a

A semiconductor industry report projects HBM revenues growing from about $16 billion toward more than $100 billion by 2030 due to AI infrastructure expansion. https://www.tomshardware.com/tech-industry/semiconductors/semiconductor-industry-enters-giga-cycle-as-ai-infrastructure-spending-reshapes-demand

Micron plans a $9.6 billion HBM manufacturing facility to help address memory supply constraints for AI hardware. https://www.tomshardware.com/tech-industry/semiconductors/micron-plans-hbm-fab-in-japan-as-ai-memory-race-accelerates

Reports describe how memory makers are prioritizing HBM and AI memory, driving DRAM and RAM price shifts as consumer segments face shortages. https://www.theverge.com/report/839506/ram-shortage-price-increases-pc-gaming-smartphones