Memory, Money, and Talent: How SK Hynix Is Locking in Engineers Amid a Chip Supercycle

2026 Memory Chip Price Surge: Why DRAM and NAND Costs Are Rising So Fast

In early 2026, memory chip prices surged dramatically, with DRAM rising up to 95% and NAND Flash up to 60% compared with late 2025. Driven by soaring AI workloads and limited supply capacity, these price increases are reshaping costs for cloud providers, technology manufacturers, and consumers alike.

Do Birds Talk? Evidence from a 20-Year Study of Avian Communication

For decades, human language has been seen as a defining feature separating us from other animals. Recent research on Japanese tits, small songbirds of East Asia, challenges this view in surprising ways. Over twenty years of careful observation reveal that these birds combine calls in specific sequences, and that the order of these calls affects how other birds respond.

Papermoon: The Space-Grade Linux for the NewSpace Era

San Francisco Power Outage December 2025: Causes, Impacts, and Restoration Timeline

On December 20, 2025, San Francisco faced a massive power outage that left tens of thousands without electricity for hours. The blackout disrupted transportation, businesses, and daily life across multiple neighborhoods. PG&E crews worked through the night to restore power, while city officials and emergency services coordinated safety measures.

Why European Startups Struggle to Scale

This in-depth analysis explores why European startups continue to face challenges in scaling compared with the United States and China. Drawing on 2025 data, the article examines venture capital trends, unicorn growth, regional disparities, and structural barriers including regulatory complexity, market fragmentation, and talent migration.

The Memory Shortage and the Cost of Technology in 2026

The global memory shortage in 2026 is significantly affecting the technology industry. Rising DRAM and high-bandwidth memory prices are driving higher costs for smartphones, personal computers, servers, and network equipment. Shipment forecasts are slowing, average selling prices are climbing, and manufacturers are adjusting specifications to manage supply constraints.

The Solvent in the Stream: Unmasking the Industrial Origins of the Parkinson’s Pandemic

A major shift is happening in neurology. Scientists are moving beyond genetics to investigate a “silent pandemic” triggered by industrial chemicals. This 4,000-word feature examines how the solvent TCE is linked to the world’s fastest-growing brain disorder and what the 2025 EPA ban means for your health.

Inside the High Court Showdown Between the Metropolitan Police and the Freemasons

A historic legal battle has erupted between the Metropolitan Police and the United Grand Lodge of England over a new mandatory membership disclosure rule. This investigative feature examines the clash between institutional transparency and the right to privacy, exploring the influence of the inquiry into the 1987 murder of Daniel Morgan and the legal precedents of the European Court of Human Rights.

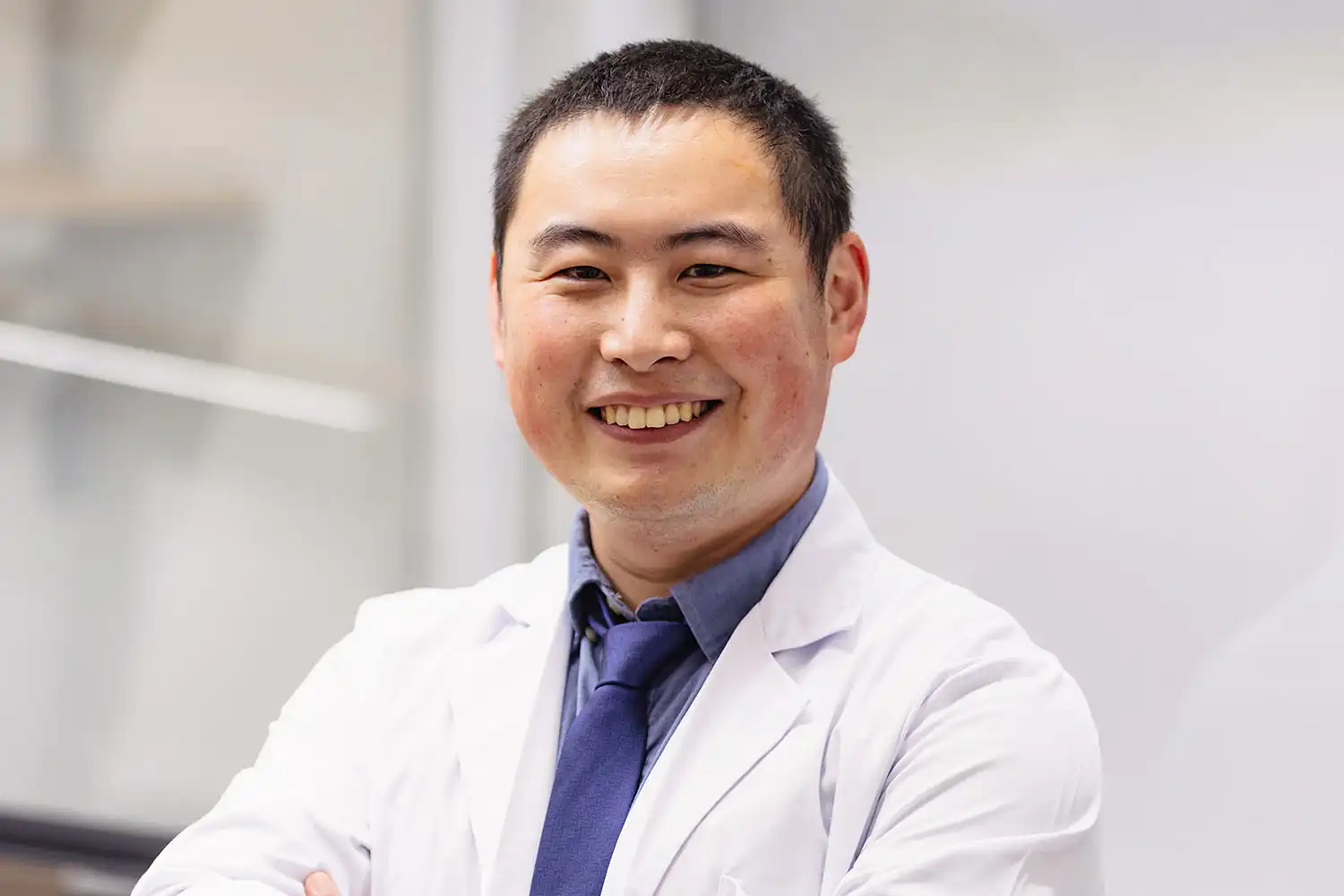

Beyond Chemotherapy: How a Single Dose of Frog Gut Microbes Eliminates Solid Tumors

A breakthrough study from the Japan Advanced Institute of Science and Technology (JAIST) reveals that a single dose of the bacterium Ewingella americana, isolated from the Japanese tree frog, can completely eliminate solid tumors in mice. This deep dive explores how this “living drug” targets hypoxic tumor cores and re-educates the immune system to prevent cancer recurrence.

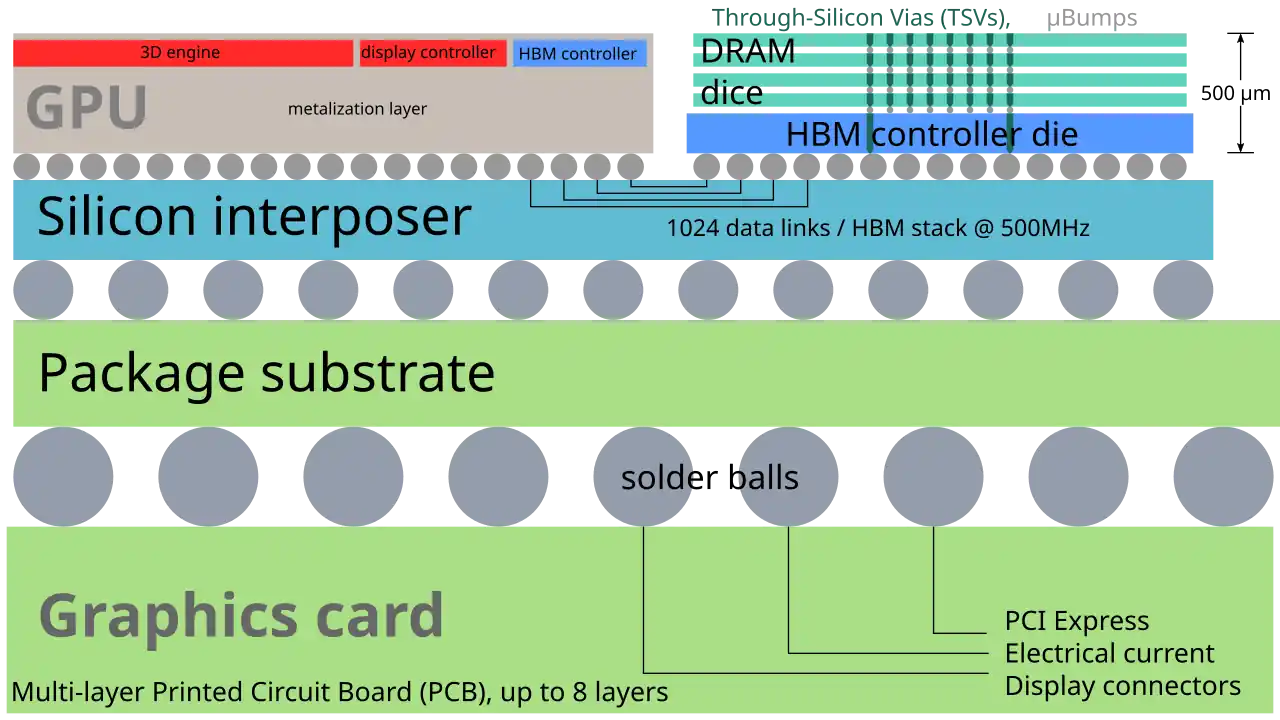

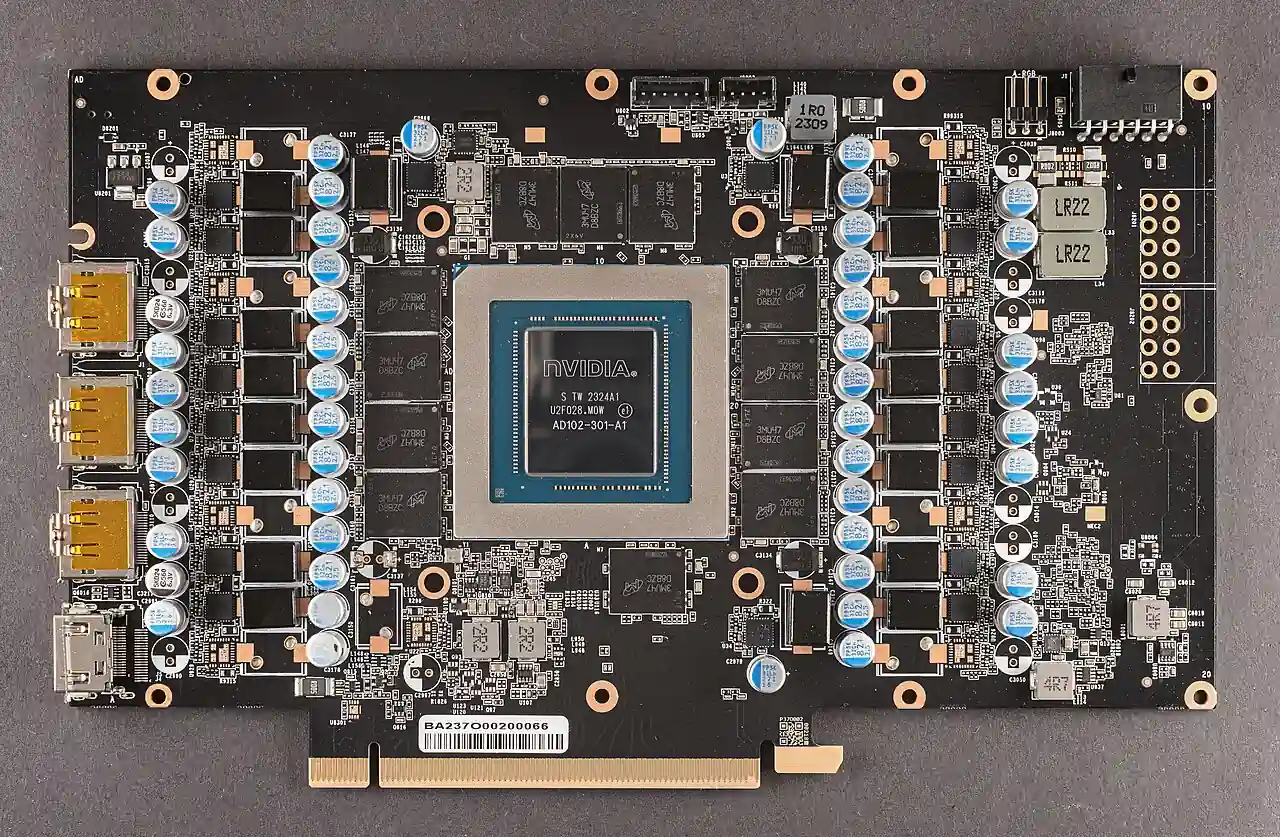

How High-Bandwidth Memory Has Become the Bottleneck of the AI Era

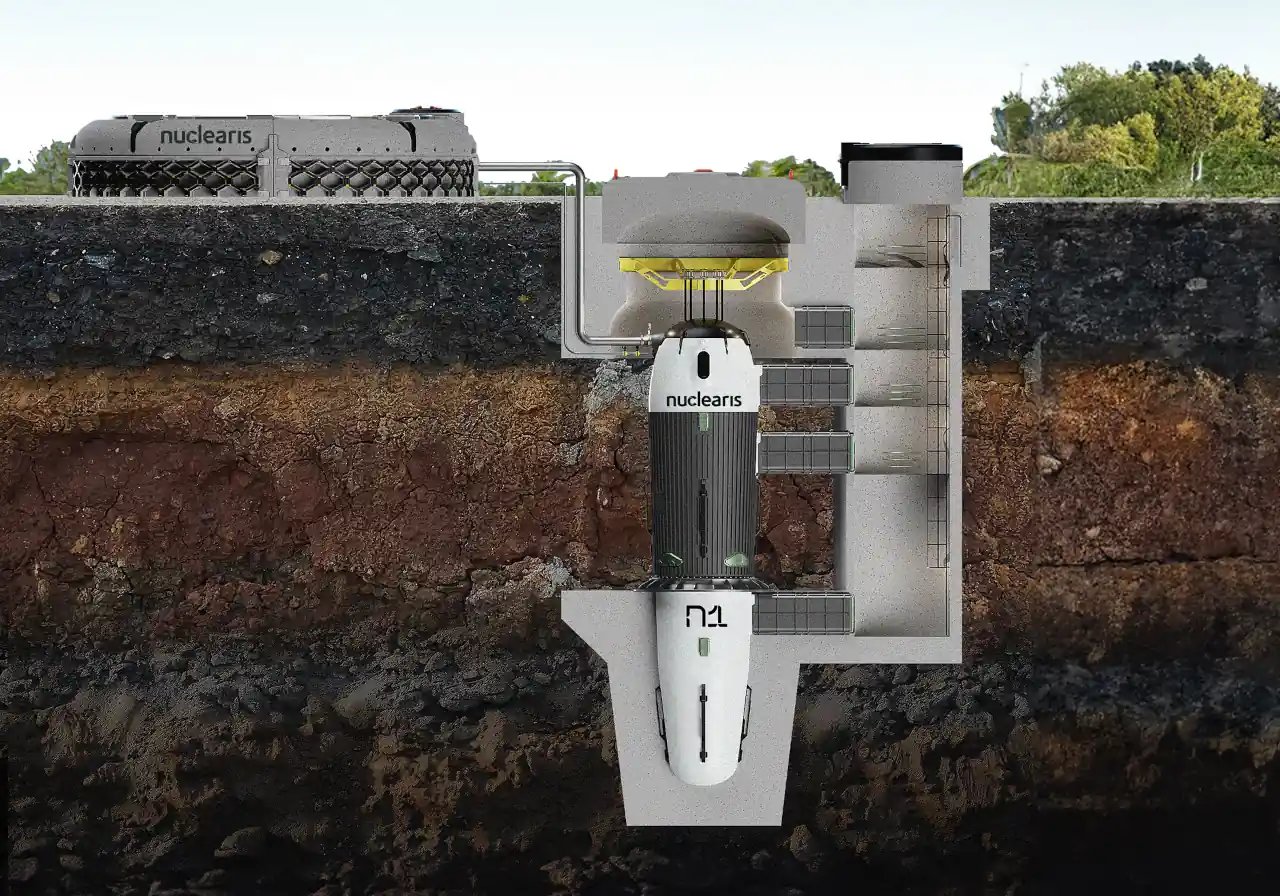

How Micro‑Nuclear and Small Modular Reactors Are Shaping the Future of Data Center Power

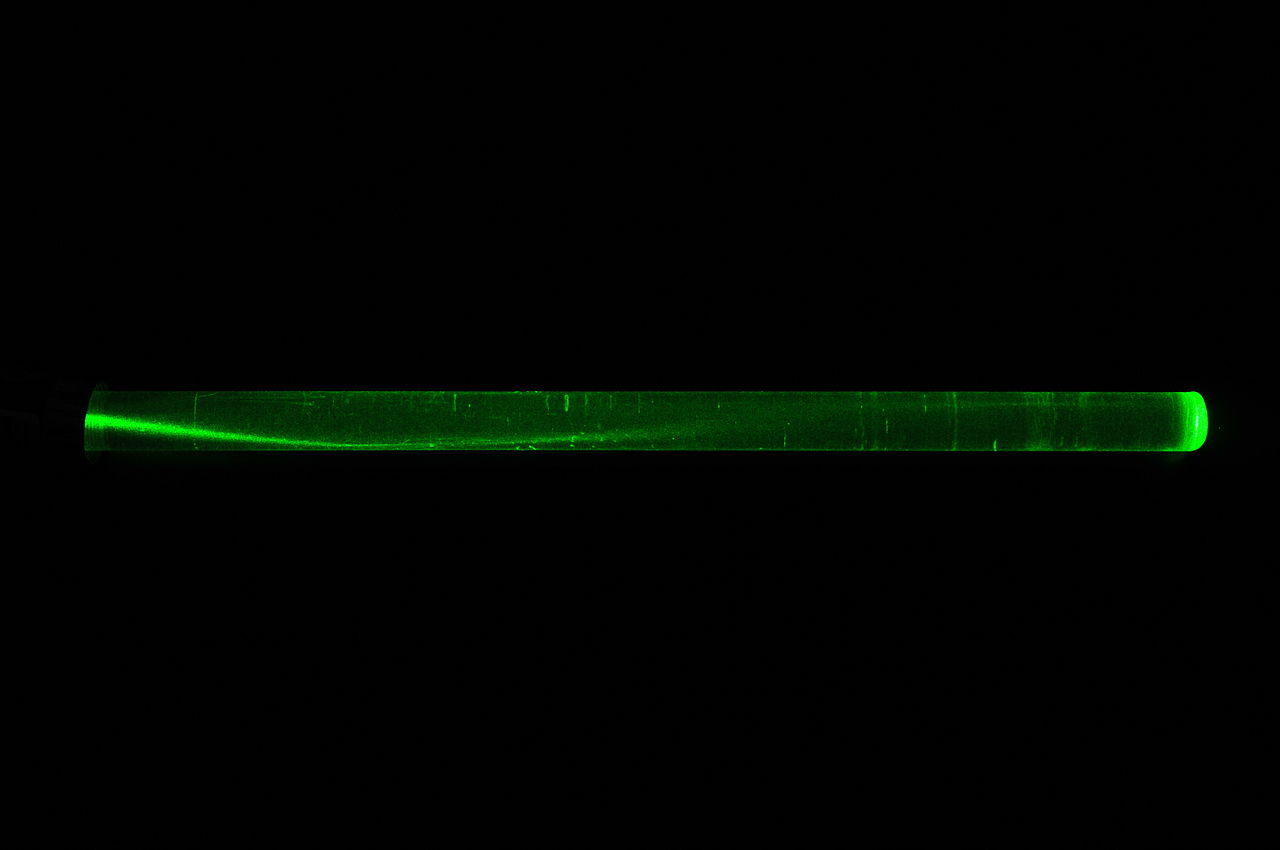

Hollow-Core Fiber: The Breakthrough Technology Accelerating Global Data Networks

Hollow-core optical fiber is poised to transform global telecommunications. By guiding light through air instead of glass, this breakthrough technology reduces latency, increases data transmission speed, and lowers signal loss. Major tech companies like Microsoft are already deploying hollow-core fiber to enhance AI data centers and high-speed networks.
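The latency advantage comes down to one relation: light's one-way delay per kilometer equals the medium's group index divided by the speed of light in vacuum. The short Python sketch below works that through. The index values (about 1.468 for a standard solid silica core, about 1.0003 for an air-filled hollow core) are typical assumed figures chosen for illustration, not numbers reported in the article.

```python
# Rough sketch: one-way propagation delay in optical fiber.
# Assumed group indices (typical illustrative values, not from the article):
#   ~1.468 for a standard solid silica core, ~1.0003 for an air-filled hollow core.

C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s


def delay_us_per_km(group_index: float) -> float:
    """Microseconds for light to travel 1 km in a medium with the given group index."""
    return group_index / C_KM_PER_S * 1e6


SOLID_CORE_INDEX = 1.468    # assumption: conventional glass-core fiber
HOLLOW_CORE_INDEX = 1.0003  # assumption: light guided mostly through air

solid = delay_us_per_km(SOLID_CORE_INDEX)
hollow = delay_us_per_km(HOLLOW_CORE_INDEX)
reduction = 1 - hollow / solid  # fractional latency saving of the hollow core

print(f"solid core:  {solid:.2f} us/km")
print(f"hollow core: {hollow:.2f} us/km")
print(f"latency reduction: {reduction:.0%}")
```

With these assumed indices the script prints roughly 4.90 µs/km for glass, 3.34 µs/km for air, and a latency reduction of about 32%. Real-world gains depend on the specific fiber design and route.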

Unveiling the Unseen: A New Era of Transparent Living Organisms

Researchers at Stanford University have developed a groundbreaking method to temporarily make the skin of living mice transparent using a common food dye, tartrazine. This reversible optical clearing allows scientists to observe blood vessels, organs, and cellular processes in real time without invasive procedures.

China’s Record Gold Discovery Explained: Scale, Impact and Global Implications

Digital Archaeology Reveals the Lost Architecture and Urban Life of Pompeii

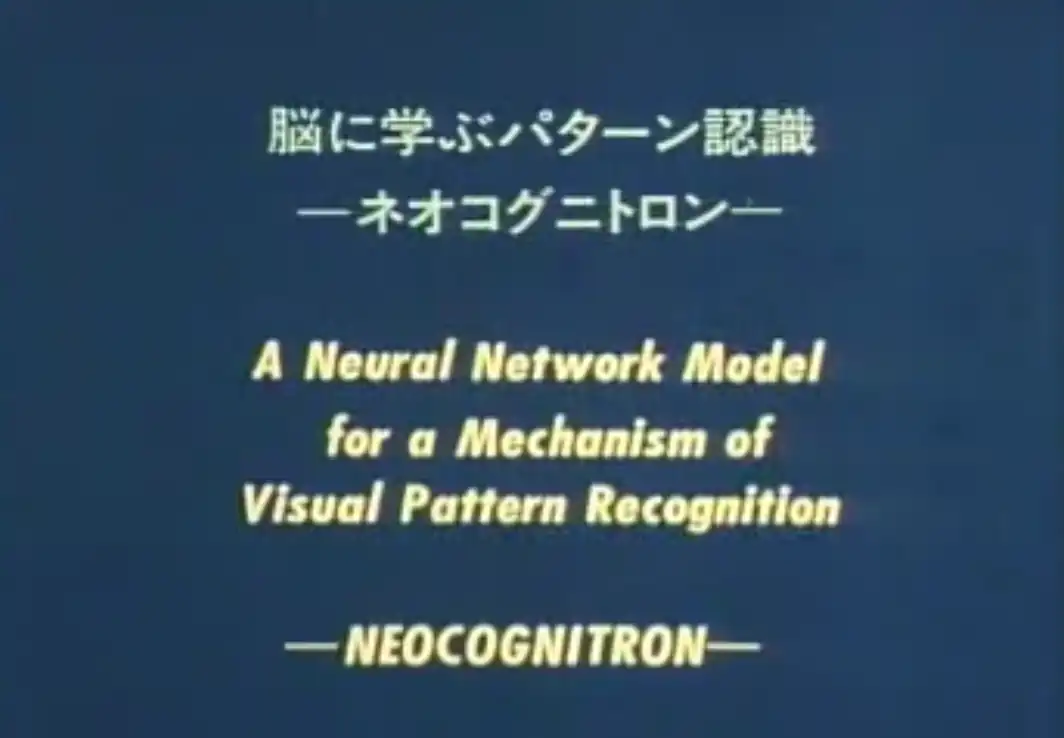

Who Really Invented Convolutional Neural Networks? The History of the Technology That Transformed AI

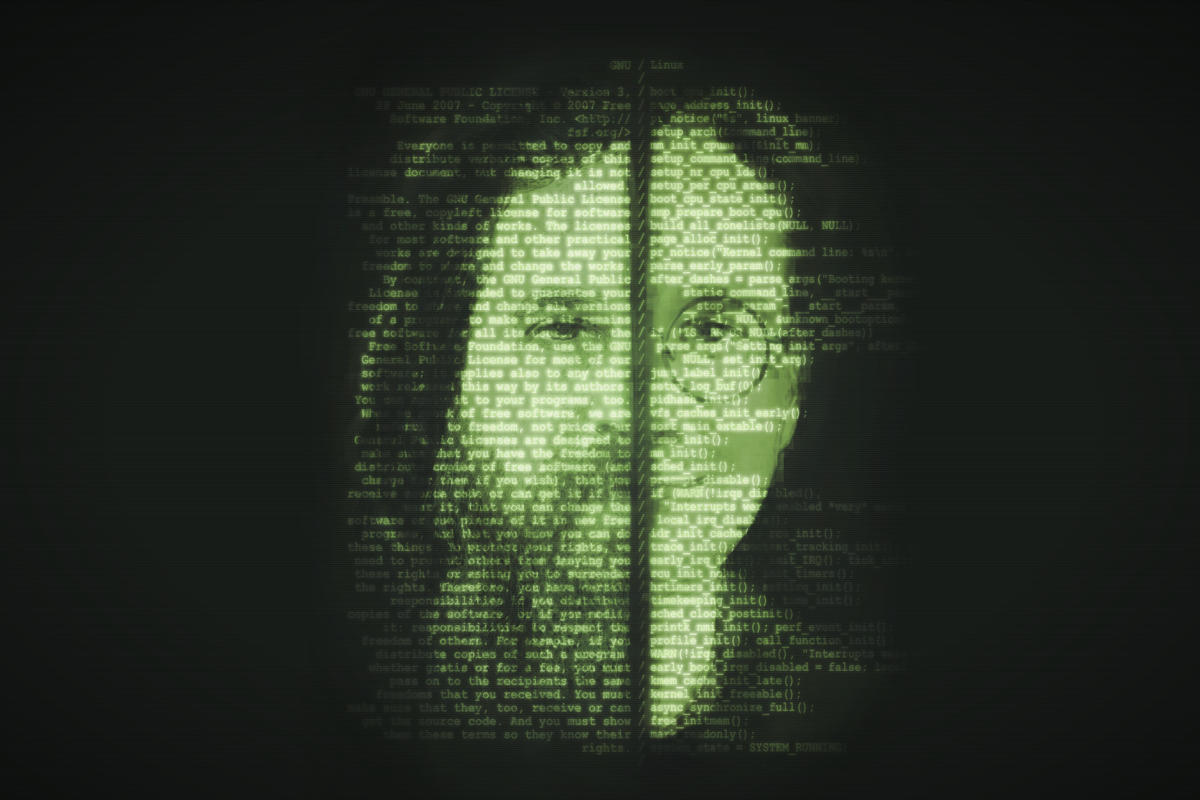

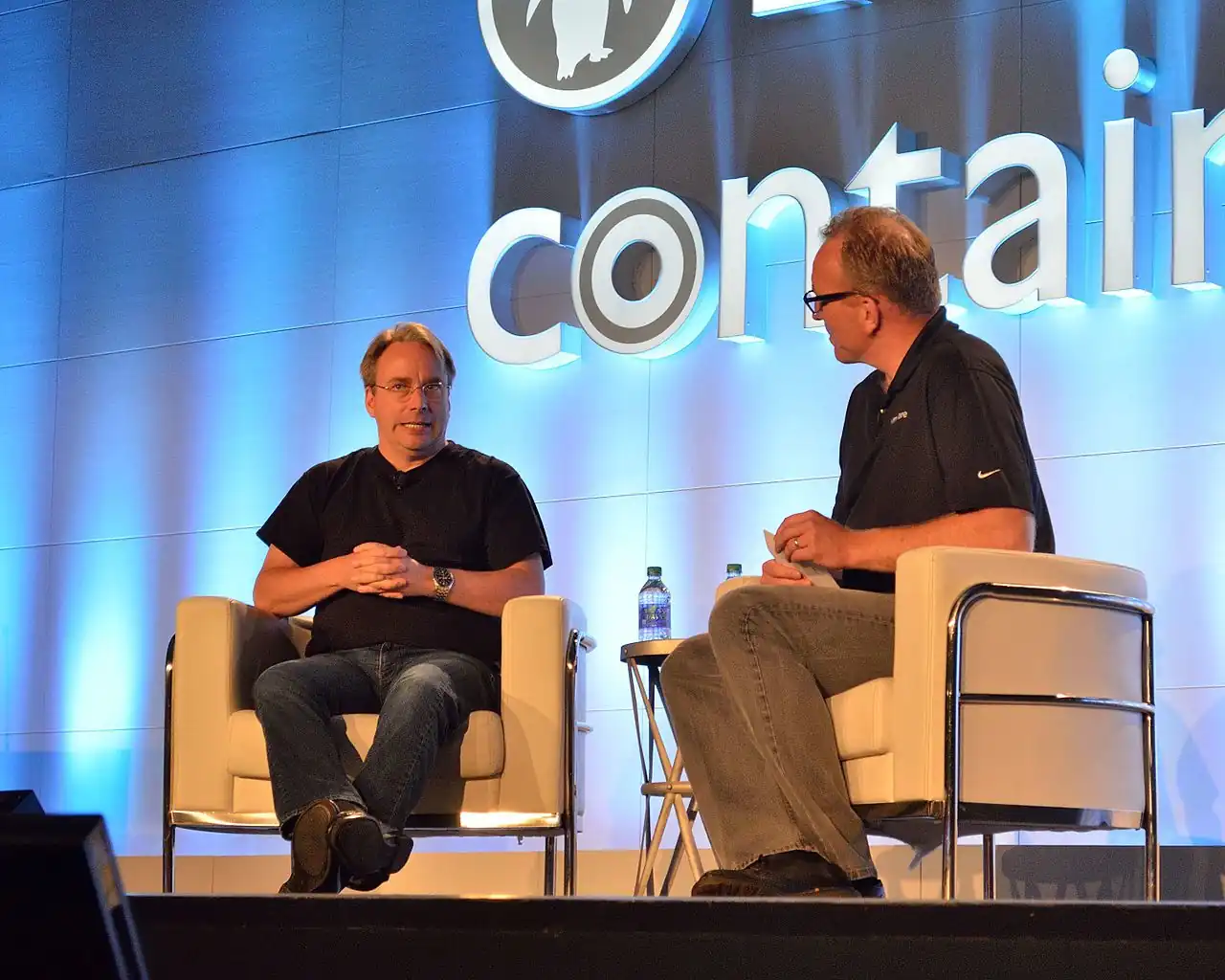

From Supercomputers to Smartphones: How Linux Became the Backbone of Modern Technology

From powering the world’s fastest supercomputers to running the smartphones in our pockets, Linux has quietly become the backbone of modern technology. This article explores how an open-source passion project grew into a global powerhouse, shaping innovation across servers, devices, and entire industries.

Compiler.next and the Dawn of AI‑Native Software Engineering

Compiler.next represents a new era in software engineering, where AI collaborates directly with developers to generate code from high-level intent. Unlike traditional compilers, it uses search-based synthesis, iterative refinement, and dynamic testing to produce reliable programs.

GPU Depreciation Exposed: A Hidden Threat to AI Economics

GPU depreciation is a hidden threat in AI economics, quietly impacting profits, investments, and the cost of AI infrastructure. This article explores the debate over depreciation schedules, hardware lifespan, and economic risk, helping investors and companies understand the financial realities behind AI hardware deployment.

Germany’s State-Funded Content Moderation Network: A Closer Look

The 2025 liber-net report on Germany’s state-funded content moderation network has renewed debate over how online speech is managed in the country. The report maps more than 330 organizations involved in moderation-related work, ranging from academic departments to non-profits and government agencies.

Unmasking a Shadowy Surveillance Empire: How Secret Tech Tracks World Leaders, Journalists, and Individuals

Interstellar Comet 3I/ATLAS: A Comprehensive Assessment

Visa and Mastercard Reach $38 Billion Settlement with U.S. Merchants After Two Decades of Litigation

Visa and Mastercard reached a $38 billion settlement with U.S. merchants to end a two-decade dispute over credit card swipe fees. The deal lowers interchange rates and adds new flexibility for businesses while allowing both companies to deny wrongdoing. If approved, it could reshape how payment fees are managed across the U.S. retail and financial sectors.

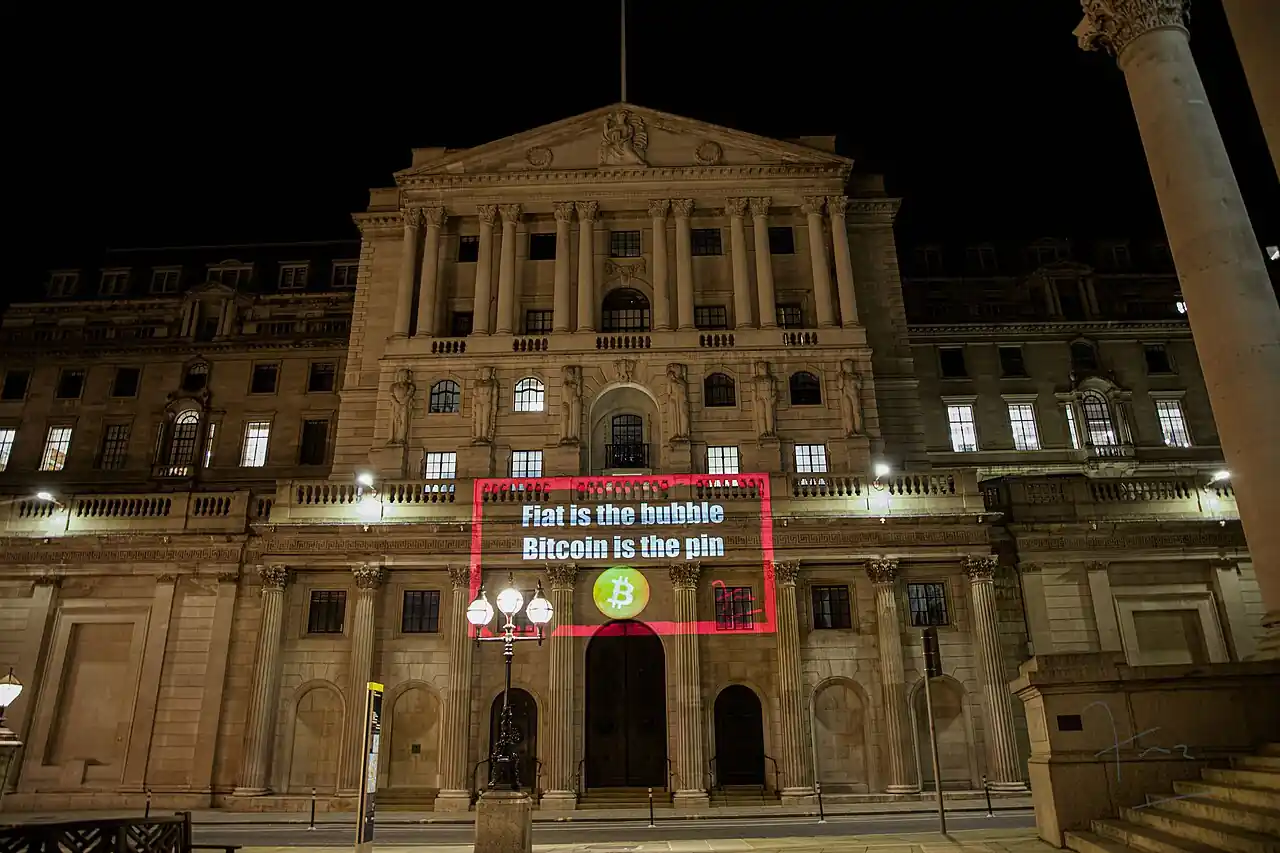

Three Scenarios That Could Shape Bitcoin’s Path to One Million Dollars

Bitcoin has always inspired bold predictions. From early adopters who viewed it as a revolutionary form of money to institutional investors who see it as digital gold, debate over its ultimate value remains fierce. As of now, Bitcoin trades in the low six figures, far from the million-dollar price targets long promised by its most devoted believers.

Steam Just Made Its Game Pages Wider. Here’s Why It Matters

What Past Education Technology Failures Can Teach Us About the Future of AI In Schools

American technologists have long urged schools to rapidly adopt new inventions, many of which failed to deliver lasting benefits. Examples include film strips replacing textbooks, mobile phones in classrooms, and early internet-connected classrooms, none of which reliably improved student learning or outcomes.

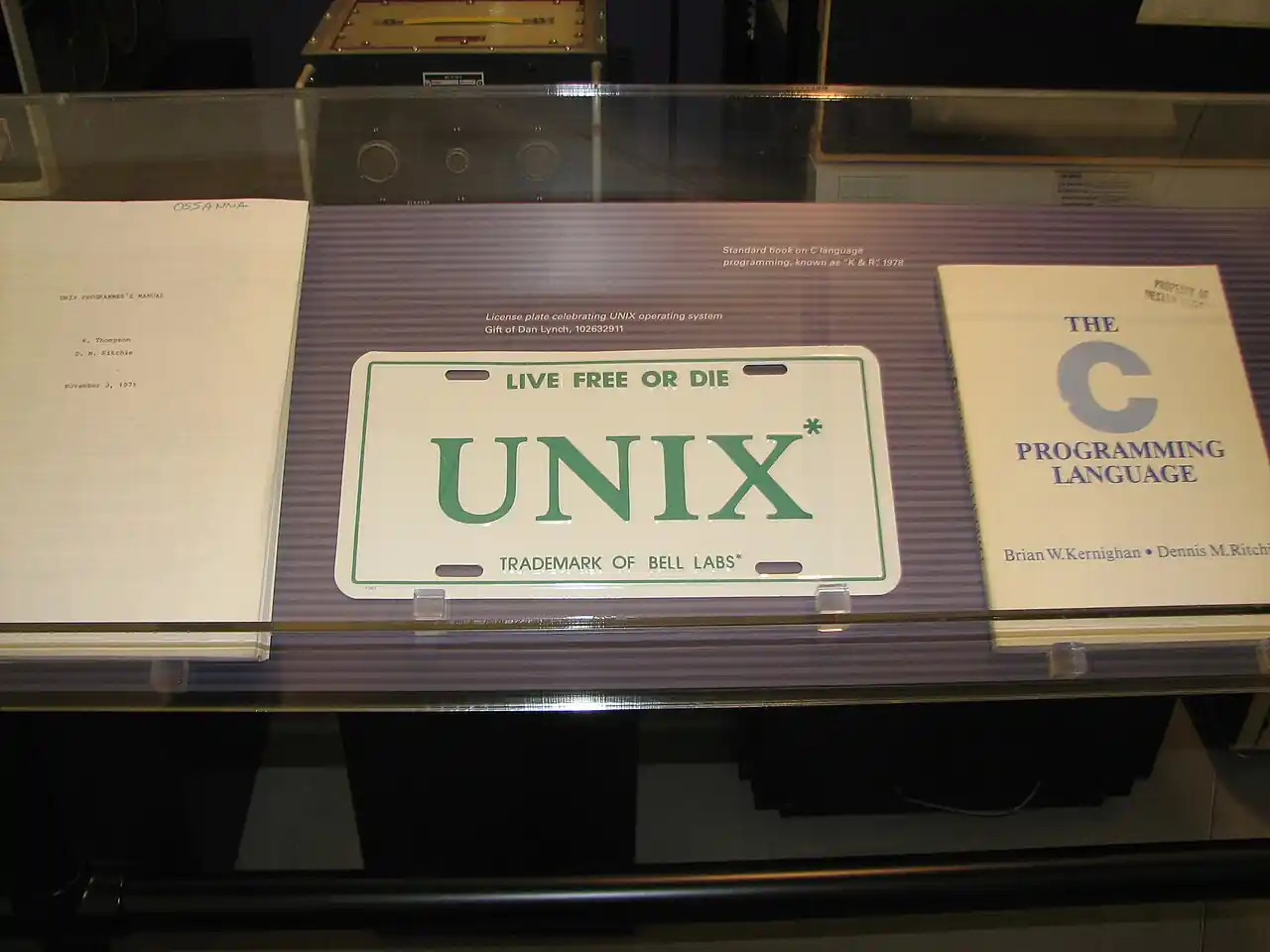

The Lost Tape: Unearthing Unix’s Forgotten Fourth Edition

The Habsburgs’ Hidden Treasure: A Century-Old Secret in Canada

Submerged Time Capsule: Unearthing a Historic Shipwreck in Malaysia

A remarkable shipwreck has been uncovered off Pulau Melaka, Malaysia, revealing a massive wooden vessel estimated to be over 160 feet long. Dating back over a thousand years, the discovery provides new insight into early Southeast Asian maritime history, sophisticated shipbuilding techniques, and regional trade networks that existed long before the founding of the Melaka Sultanate.

Thieves Steal Priceless Jewels in Daylight Heist at the Louvre

The Louvre Museum in Paris was the site of a daring and highly coordinated robbery on Sunday, October 19, 2025, when a group of thieves stole jewellery of immense cultural and historical value. The incident, which occurred in broad daylight, has drawn widespread international attention and prompted immediate investigations by French authorities.

Ireland Makes Basic Income for Artists Permanent Starting 2026

Starting in 2026, Ireland will make its Basic Income for the Arts program permanent, providing 2,000 artists with a weekly stipend of €325. The initiative aims to reduce financial stress, foster creative work, and strengthen the country’s cultural sector by offering stable income support to artists and creative professionals.

Starting November 3, 2025, LinkedIn Will Use User Data by Default to Train AI

LinkedIn will begin using public user data by default to train its artificial intelligence models starting November 3, 2025. This update affects information shared on profiles and posts, with an opt-out option available. The change aims to improve AI-driven features while raising important questions about privacy and consent.

Mojo: Can It Finally Give Python the Speed of Systems Languages?

Mojo is an emerging programming language designed to combine Python’s ease of use with the performance of systems languages like C++. Targeted at AI and high-performance computing, Mojo offers optional static typing, low-level control, and seamless Python interoperability. This article explores Mojo’s design, performance potential, current challenges, and what it could mean for the future of programming.

US Corporate Debt Hits Record Highs Just as AI Frenzy Sparks Bubble Fears

The United States faces a growing financial challenge as corporate debt reaches record highs alongside a surge in AI investments. This article examines how rising borrowing and AI-driven market enthusiasm could combine to create risks for economic stability, exploring data, market trends, and policy responses shaping the future.

When Bins Stop, Libraries Close and Parks Wilt: The Hidden Damage of Council Budget Cuts

After years of shrinking budgets and rising demand, UK councils are being forced to cut back on core services. From less frequent bin collections to reduced social care, the effects are being felt in neighbourhoods across the country. This piece explores what’s changing, why it’s happening, and what it means for the future of local services.

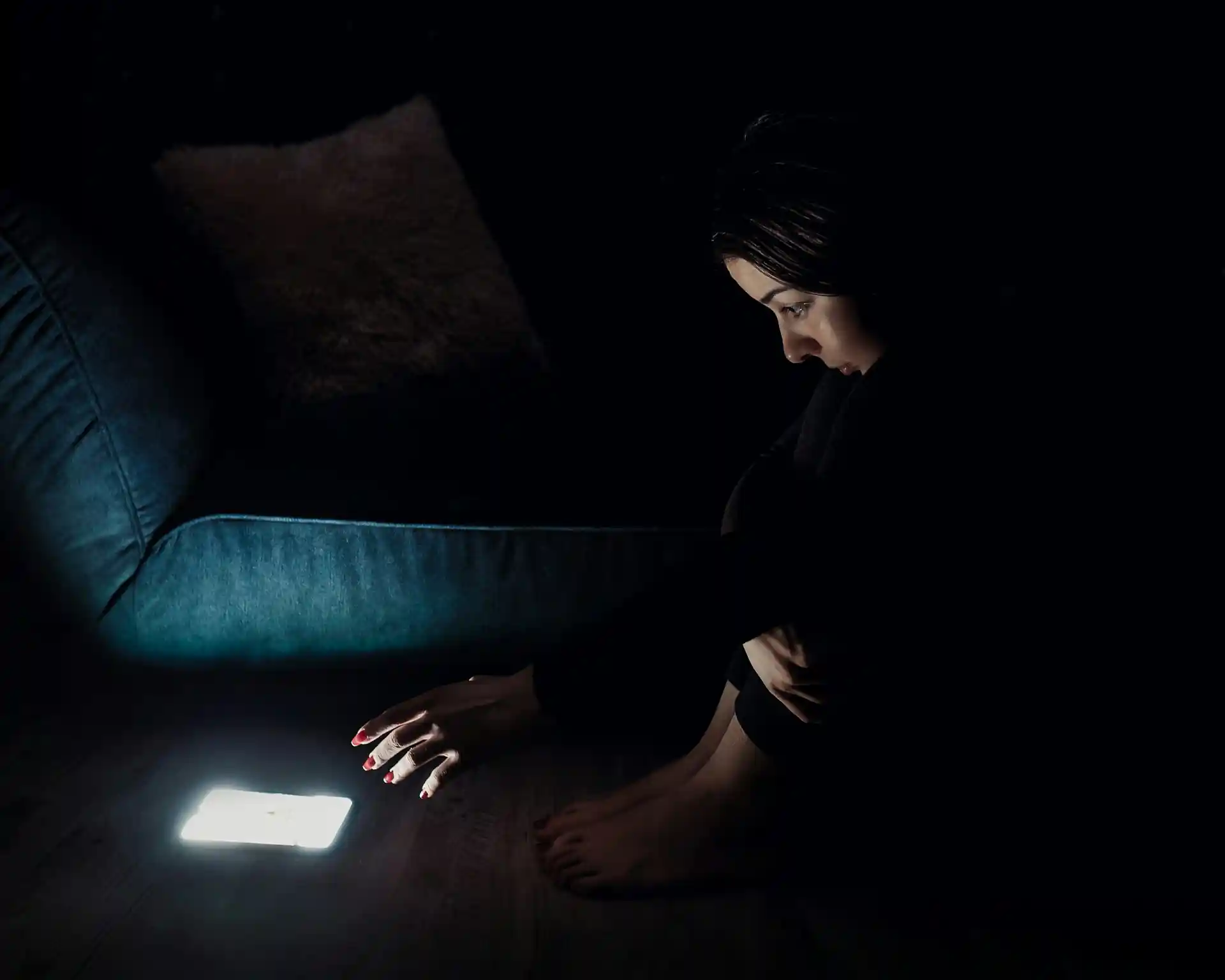

Why Your Brain Loves Doomscrolling: Research Reveals How Social Media Fuels Engagement in Anxious Minds

Why does doomscrolling feel so addictive? A 2024 fMRI study in Computers in Human Behavior reveals that negative social media content triggers dopamine in anxious brains, creating a compelling cycle of engagement. This article explores how algorithms amplify this behavior, the mental health impacts, and practical strategies like mindfulness and feed curation to regain control.

Florida Plans to End All Vaccine Mandates amid Evolving Debate on Safety and Liberty

Florida has announced plans to end all state vaccine mandates, including those for schools and healthcare workers. The move has sparked a complex debate around public health, individual freedoms, and vaccine safety. The article examines the reasons behind the decision, historical vaccine concerns, and what it could mean for Florida and the nation going forward.

China’s Breakthrough 6G Chip Could Redefine Wireless Communication

4 Tips for a Healthy Heart From A Biokineticist

6G Technology: Emerging Innovations and the Race for Standard Essential Patents

The global rollout of 5G networks is already reshaping telecommunications by delivering faster speeds, lower latency, and connecting countless devices. Yet, as 5G continues to mature, attention is quickly shifting to the next frontier: 6G.

Rust vs. C: A Deep Dive Into Two Systems Programming Giants

Rust and C are two of the most powerful systems programming languages, but they take very different approaches to performance, memory safety, and concurrency. This in-depth comparison explores their strengths, trade-offs, and where each language shines.

Google Strikes $2.4 Billion Deal with Windsurf, Sidestepping OpenAI in AI Talent Race

Google’s $2.4 billion deal with Windsurf secures AI coding technology and leadership after OpenAI’s planned acquisition of the company fell through. Windsurf stays independent, advancing its autonomous coding platform with Google’s backing. The move reshapes the AI developer tools race, giving Google a crucial edge in next-generation software development.

The America Party: Elon Musk’s Bold New Political Venture

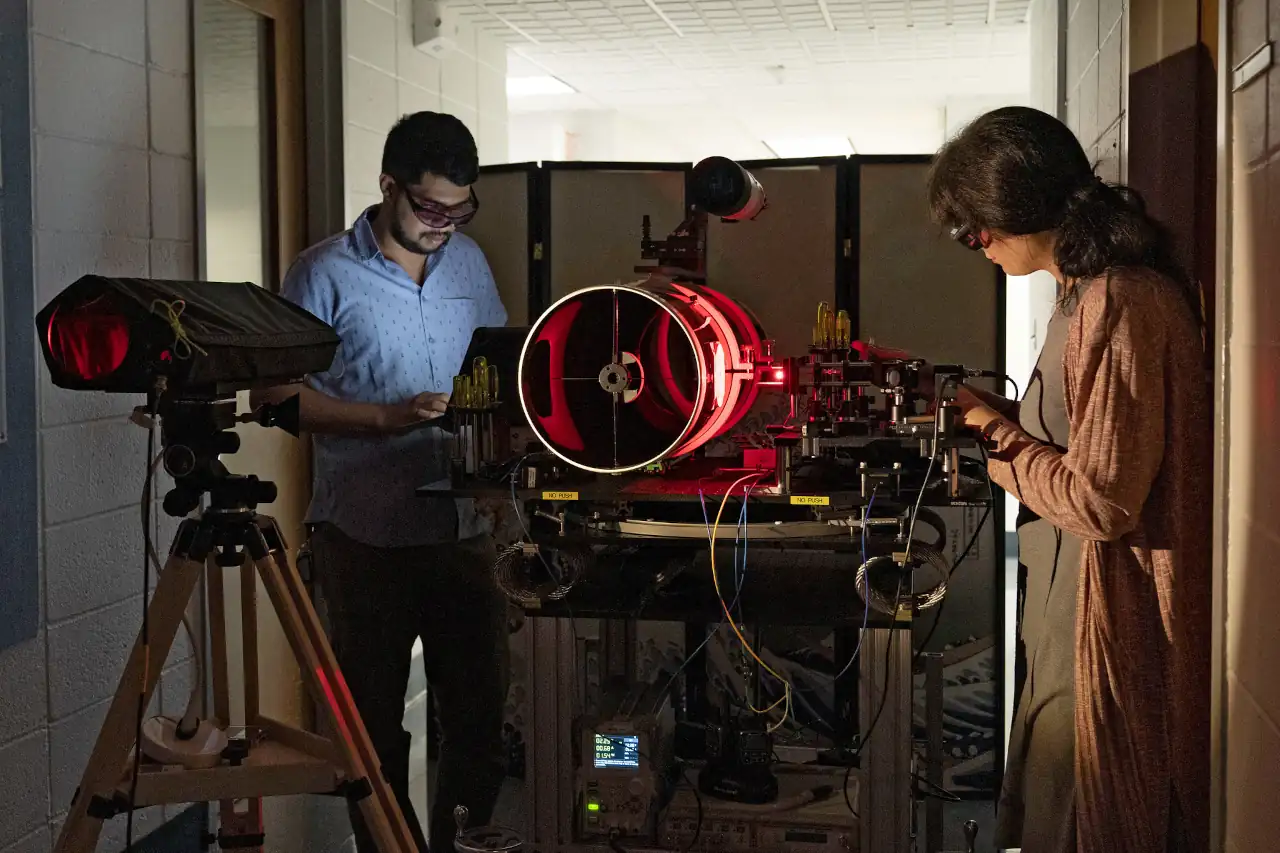

Quantum Entanglement and the Future of Communication Networks

Quantum entanglement, once a puzzling phenomenon in physics, is now at the forefront of a communication revolution. This strange connection between particles could soon enable ultra-secure networks, faster computing, and technologies we’ve only dreamed of. But what exactly is entanglement, and how close are we to turning this quantum mystery into everyday reality?

Neuromorphic Computing: Reimagining Intelligence Beyond Neural Networks

What if machines could process information as efficiently as the human brain? Neuromorphic computing is pioneering this revolution, creating hardware that doesn’t just mimic AI software but replicates the brain’s very architecture. From breakthrough technologies to economic impacts, this emerging field promises to transform the future of artificial intelligence.

China-Iran Rail Corridor: Transforming Eurasian Trade and Geopolitics

Google DeepMind Veo 3: Breakthrough in AI-Generated Cinematic Video with Realistic Audio

Google DeepMind’s Veo 3 is a groundbreaking AI model that generates high-resolution cinematic video with perfectly synchronized, realistic audio. Combining advanced multimodal transformers and large-scale training, Veo 3 offers new possibilities for entertainment, marketing, education, and synthetic media creation.

Best Performing Stocks of the Past Decade (2015–2025)

The Global Semiconductor Supply Chain: Dynamics, Challenges, and Strategic Implications

The Arctic as a New Frontier: Climate, Conflict, and Commerce

An Overview of Essential Certifications for IT Professionals

The Impact of Social Media Addiction on Mental Health

LA County’s 3% Rent Cap: A Balancing Act Between Tenant Relief and Landlord Survival

The Silent Crisis: How America’s Middle Class Is Struggling to Survive

Morocco’s CNSS Cyberattack: A 2025 Breach that Shook a Nation’s Digital Foundations

Global Patterns of Police Misconduct: A Comprehensive Overview

The Surprising Link Between Nature Sounds and Brain Performance

In an age dominated by digital noise and constant distractions, our brains are increasingly overwhelmed by artificial stimuli. From incessant phone notifications to the hum of city traffic, modern environments are filled with sounds that negatively impact our cognitive performance and mental clarity. But what if the antidote to these sounds already exists in the natural world around us?

Monkey Causes Power Outage in Sri Lanka: A Shocking Incident Disrupts the Nation’s Electricity Supply

FDA Recalls Over 2 Million Baked Goods, Including Dunkin’ Doughnuts

The FDA has announced a recall of Dunkin’ doughnuts after it was discovered that certain products may pose a health risk to consumers. While the specific cause behind the recall is still under investigation, food safety recalls like this are typically triggered when there is a risk of contamination or when a product contains allergens that were not properly disclosed on labels.

AI Optimized Carbon Materials: Revolutionizing Strength and Efficiency

Apple Agrees to $95 Million Siri Privacy Settlement: What You Need to Know

Apple has agreed to pay $95 million to settle a class-action lawsuit accusing the company of violating user privacy through its voice-activated assistant, Siri. The lawsuit claimed that Apple secretly recorded private conversations without users’ consent, betraying the company’s long-standing commitment to user privacy.

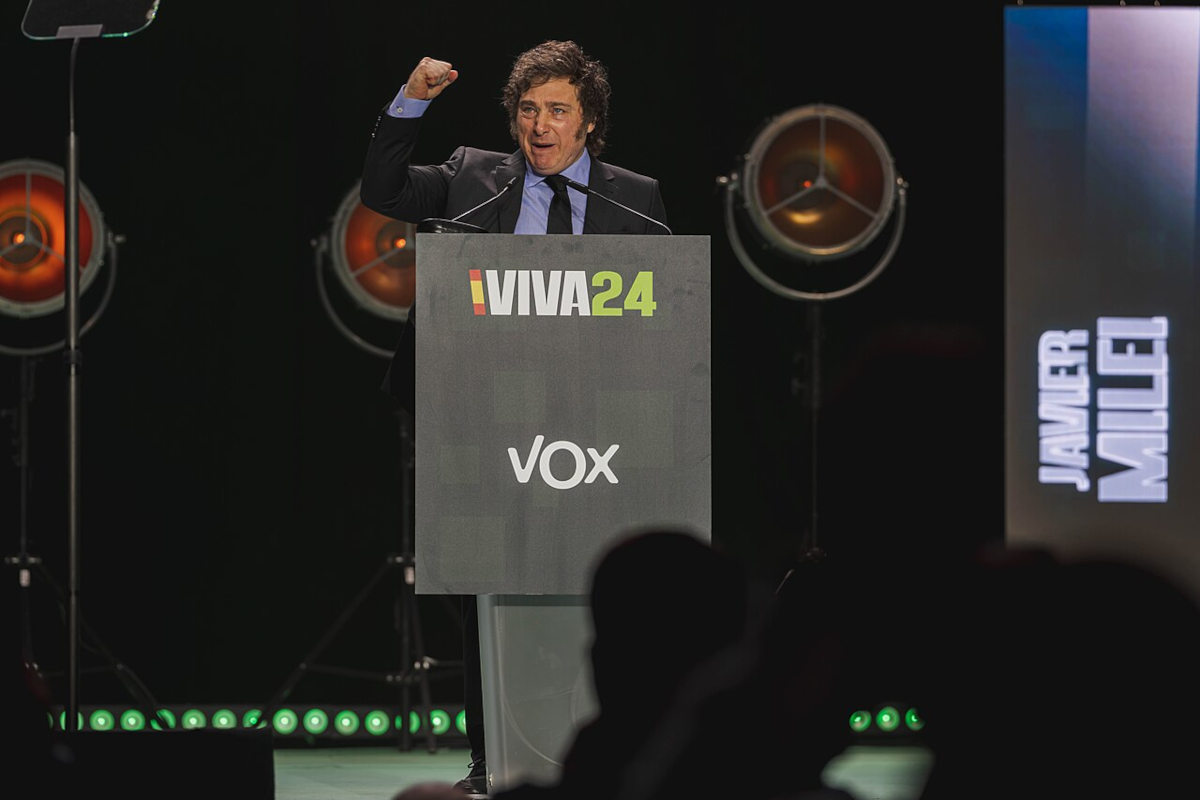

Argentina’s Soaring Poverty Levels Don’t Seem to Be Hurting President Javier Milei – But the Honeymoon Could Be Over

Venezuela Fines TikTok $10 Million for Failing to Control Deadly Viral Challenges

Elon Musk’s X and Google Miss Malaysia’s Social Media Licensing Deadline: What This Means for the Tech Industry

X (formerly Twitter) and Google’s YouTube have failed to meet Malaysia’s January 1, 2025 deadline for obtaining licenses to operate under the country’s new social media regulations. The missed deadline comes as Malaysia intensifies efforts to control online content, curb cybercrime, and ensure that major social media platforms take greater responsibility for the material users share on their services.

Gold Market Performance in 2024 and Prospects for 2025: A Year of Resilience and Future Growth

The year 2024 proved to be an exceptional one for gold, as the yellow metal defied expectations and delivered impressive gains. By the end of the year, its price had surged by nearly 27%, marking one of its best performances of the century. This rally was the largest since 2010, with gold reaching a peak of $2,790 per ounce in late October before settling at $2,626.80 by December.

Quantum Teleportation Breakthroughs: The Future of Secure Communication via Fiber Optics

The recent breakthrough at Northwestern University represents a critical milestone in the application of quantum teleportation to communication systems. Researchers performed quantum teleportation over a 30-kilometer fiber optic cable that was simultaneously carrying high-speed internet traffic.

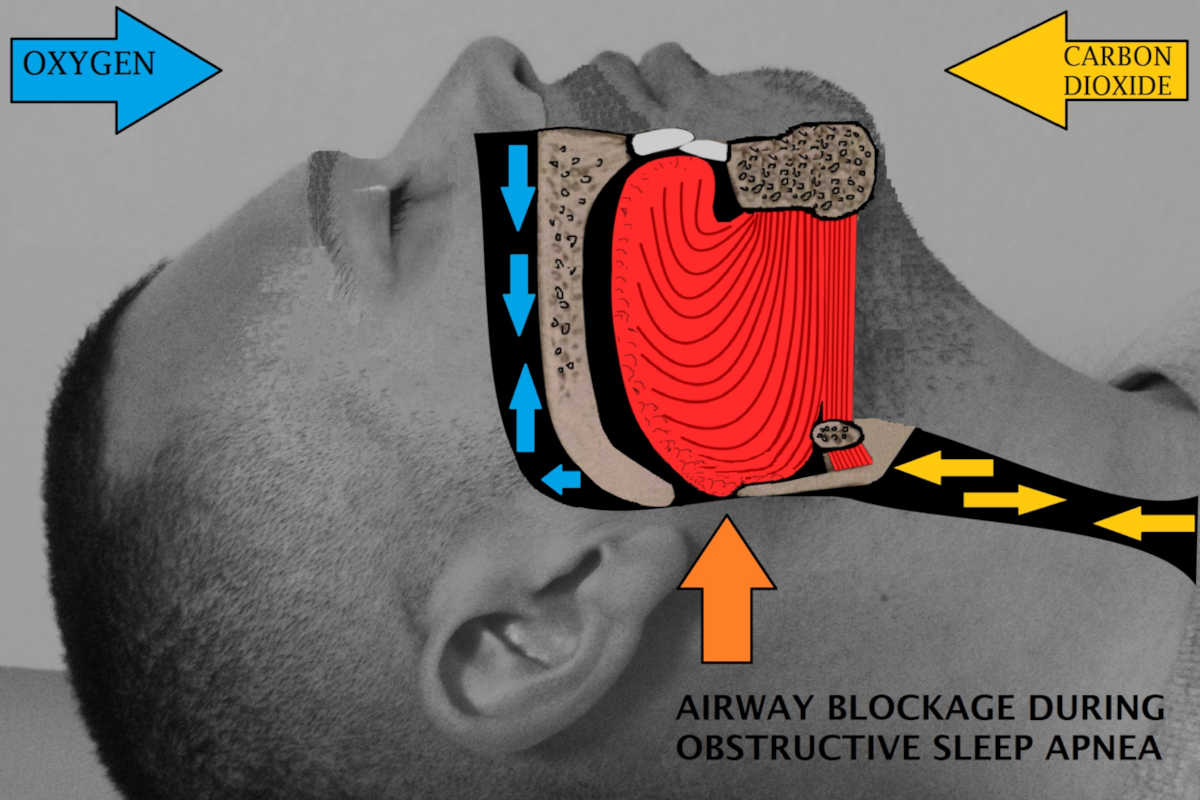

FDA Approves Zepbound® (Tirzepatide) as the First Prescription Treatment for Sleep Apnea in Adults with Obesity

Eli Lilly and Company announced that the U.S. Food and Drug Administration (FDA) has approved Zepbound® (tirzepatide) as the first and only prescription medication for adults with moderate-to-severe obstructive sleep apnea (OSA) and obesity. This groundbreaking approval offers hope to millions of individuals who suffer from these interconnected conditions.

Jury Clears Qualcomm in Legal Dispute with Arm: What the Verdict Means for the Tech Industry

In a landmark legal battle that has captured the attention of the tech industry, a federal jury in Delaware has ruled in favor of Qualcomm in its dispute with Arm Holdings over the 2021 acquisition of Nuvia, a chip startup founded by three former Apple engineers. The jury determined that Qualcomm did not breach its licensing agreement with Arm, a significant victory for the semiconductor giant.

How Linux Dominates Key Industries: From Cloud Computing to Smartphones and Supercomputers

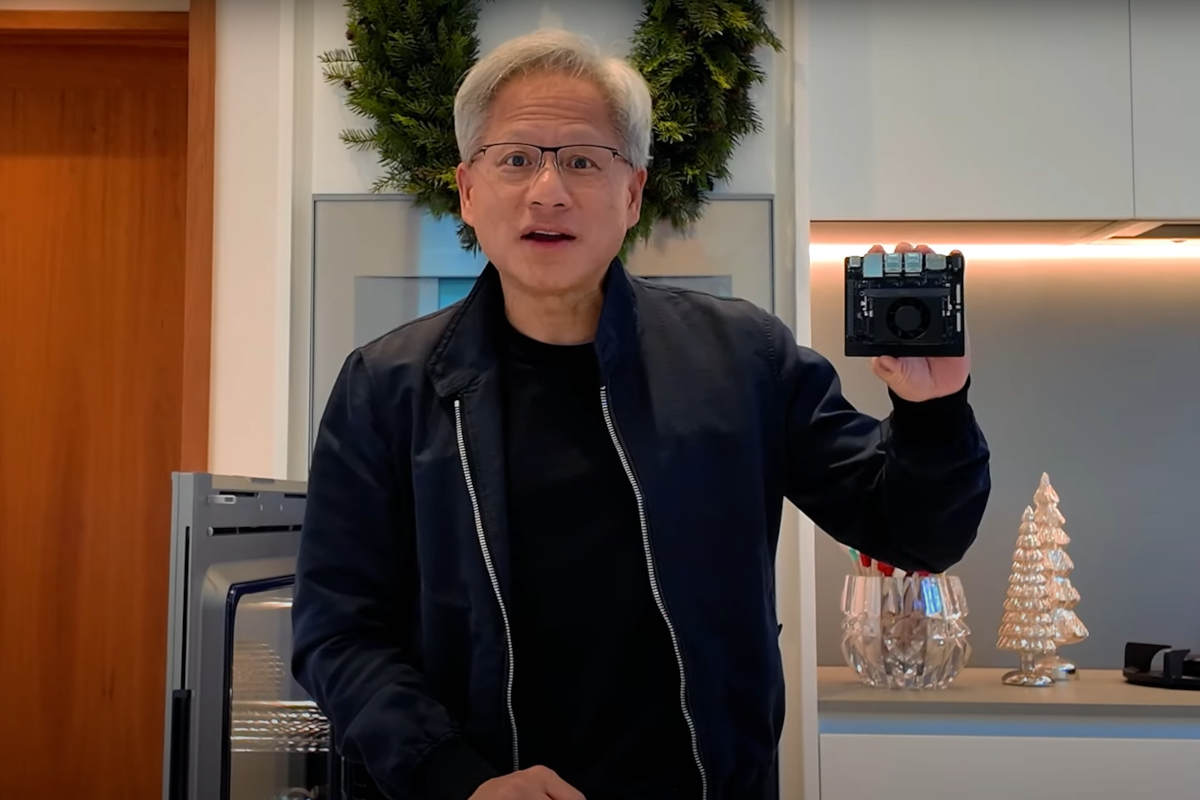

NVIDIA Jetson Orin Nano Super Developer Kit Released: Revolutionizing Generative AI for Edge Devices

NVIDIA has released the Jetson Orin Nano Super Developer Kit, a powerful and affordable AI computing platform designed to redefine the capabilities of small edge devices. At the price of $249, this compact yet high-performance platform opens up new possibilities for developers and students, enabling the seamless integration of generative AI into a wide range of applications.

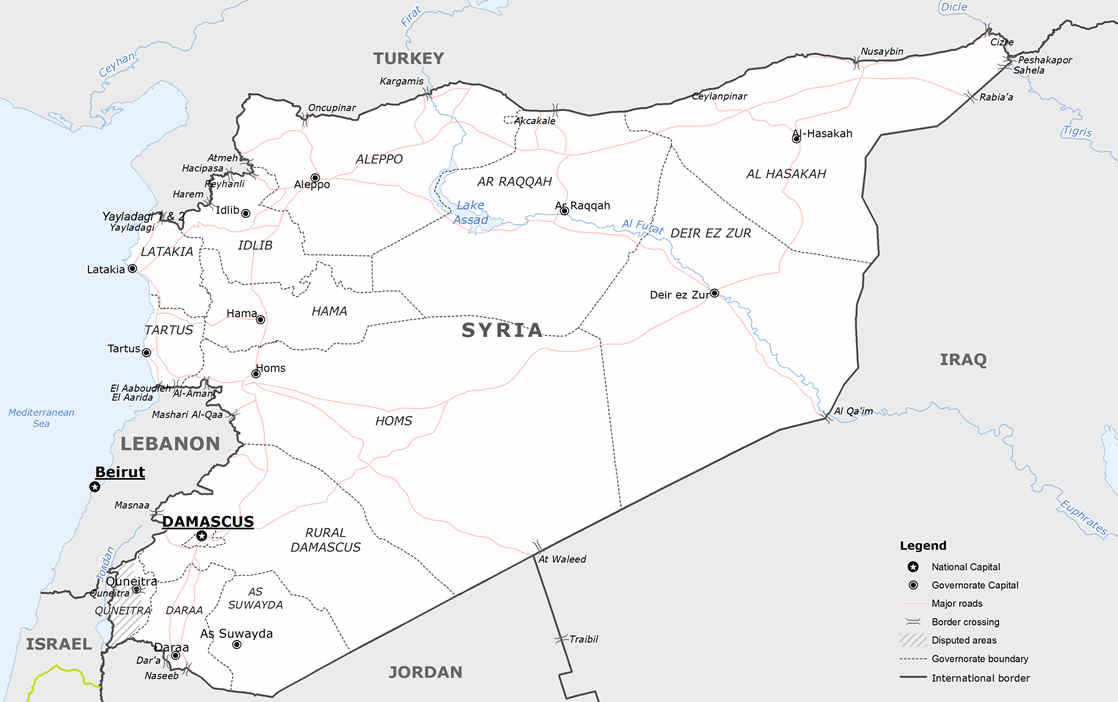

Ancient Alphabet Found in Syria: A New Chapter in Writing History

Qualcomm vs. Arm: The Battle for Chip Licensing and the Future of Semiconductor Innovation

SoftBank $100 Billion U.S. Investment Plan: A New Chapter in Tech and AI Growth

Government Shutdown Risk in 2025: What Could Happen Next?

As 2025 approaches, the possibility of a government shutdown continues to be a looming concern in Washington, D.C. Congressional leaders are under significant pressure to finalize essential legislation before the year ends, and the debate over how to pass a full-year budget has become increasingly urgent.

10 Groundbreaking Archaeological Discoveries of 2024 That Will Rewrite History

2024 has been a year of remarkable archaeological revelations, shedding new light on ancient civilizations that once ruled the earth. From Viking burial sites to the mysterious lives of the Mayans, researchers around the world have made stunning discoveries that rewrite history and challenge our understanding of ancient cultures.

Saudi Arabia Confirmed as 2034 World Cup Host: A Landmark Moment for the Kingdom

On December 11, 2024, Saudi Arabia was officially selected to host the 2034 FIFA World Cup, marking a momentous occasion in the world of football and global sports. This selection positions the Kingdom at the heart of the sporting world, highlighting its growing influence and ambition on the international stage.

Elon Musk’s Journey to $439.2 Billion: A Record-Breaking Milestone

Google Gemini 2.0: A Major Leap in AI Technology

Hundreds of Dangerous Virus Vials Go Missing in Australia: A Biosecurity Crisis

In a shocking turn of events, hundreds of vials containing live and potentially deadly viruses have gone missing from a laboratory in Queensland, Australia. The breach, which was discovered in August 2023, has triggered a full-scale investigation into what is being described as a serious violation of biosecurity protocols.

Why the 4-Day Workweek is Outperforming the 5-Day Grind

The 4-day workweek is rapidly transforming the way companies and countries think about work. As more and more businesses experiment with shorter workweeks, evidence is mounting that working less can actually lead to working more effectively. Across the globe, countries and organizations that have adopted the 4-day workweek are reporting impressive results, while the traditional 5-day workweek is being scrutinized for its inefficiency and negative impact on worker well-being.

Google Unveils Quantum Computing Chip That Could Revolutionize AI and Drug Discovery

Google has unveiled a quantum computing chip named Willow, which completed a benchmark computation that Google estimates would take today’s fastest supercomputers about 10 septillion years. At roughly 4 cm² in size, Willow could reshape industries ranging from drug discovery to artificial intelligence (AI), significantly accelerating the pace of scientific and technological advancements.

Nvidia Under Investigation by China for Suspected Anti-Monopoly Violations Amid U.S. Chip Crackdown

In the latest twist in the ongoing global tech tensions, Beijing announced on December 9, 2024, that it was investigating Nvidia for potential violations of China’s anti-monopoly law. This investigation is widely seen as a response to the recent actions taken by Washington against China’s semiconductor industry, particularly the U.S. chip curbs imposed on Chinese companies.

Crypto News: Ethereum Set to Break All-Time High as BlackRock Injects $500M – Bullish Momentum Builds

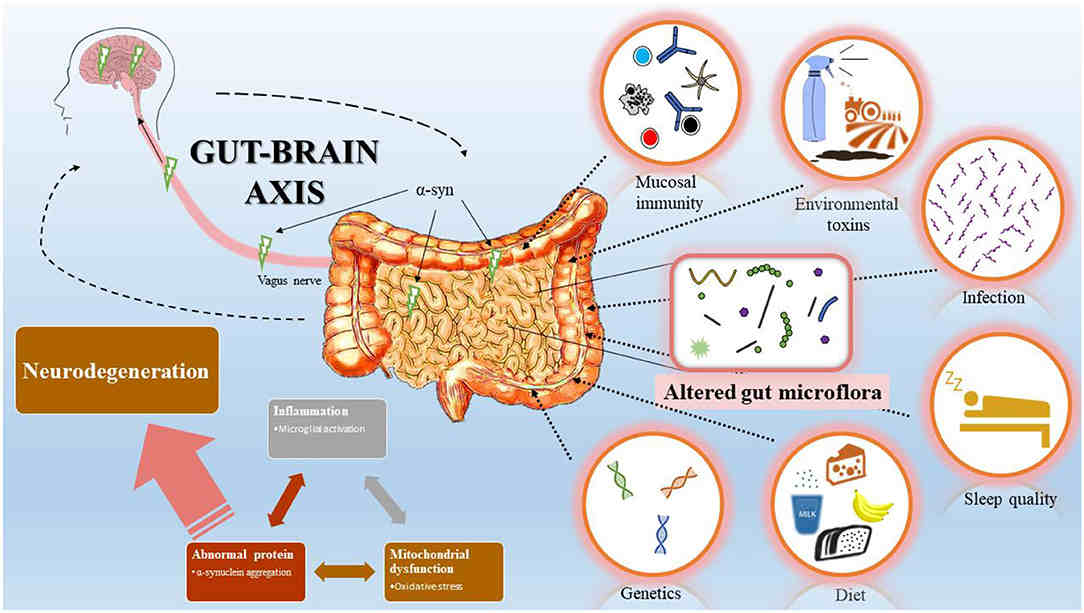

Unlocking the Secrets of the Second and Third Brain: Recent Findings on the Gut and Heart Neural Networks

The recent discoveries surrounding the second brain (the gut) and the third (the heart) are reshaping how we understand the relationship between our brain, body, and emotions. By recognizing the complexity of these neural networks, researchers and healthcare professionals are uncovering new approaches to treating mental and physical health that go beyond the traditional focus on the brain alone.

Understanding the 14th Amendment: History, Importance, and Political Challenges

John Bingham, a congressman from Ohio and a key architect of the 14th Amendment, played a pivotal role in shaping its provisions, especially its Equal Protection Clause and Due Process Clause. His work in crafting the 14th Amendment was deeply influenced by his commitment to protecting the civil rights of newly freed African Americans and ensuring equality under the law after the Civil War.

The Fall of the Al-Assad Regime: A Turning Point in Syrian History

Oil and Gas in Syria: The Hidden Forces Behind a Broken Industry

With proven reserves of approximately 2.5 billion barrels of oil and 8.5 trillion cubic feet of natural gas, Syria’s energy sector could play a crucial role in its long-term economic recovery. However, the road to recovery will be a challenging one, requiring both political stabilization and the rebuilding of critical infrastructure.

The Electric Vehicles Revolution: Breaking News and Key Developments in 2024

2024 has been a pivotal year for the EV industry, marked by significant technological innovations, market growth, and a series of milestones that signal an accelerated shift towards electric mobility. Below, we explore the key developments in the EV space from this year that highlight the ongoing transformation of transportation worldwide.

The Tragic Murder of Louise Smith: A Case of Deception and Betrayal

U.S. Police Concerned Over iPhones Secretly Rebooting to Block Access

Toxic Bosses Are a Global Issue With Devastating Consequences for Organizations And Employees

Toxic leaders are a widespread issue plaguing employees and organizations across various industries. A 2023 survey found that 87 per cent of professionals have had at least one toxic boss during their careers, with 30 per cent encountering more than one. Another survey found that 24 per cent of employees are currently working under the worst boss they’ve ever had.

Where There’s Smoke: The Rising Death Toll From Climate-Charged Fire in the Landscape

Some People Love to Scare Themselves in an Already Scary World − Here’s the Psychology Of Why

Researchers found that people who visited a high-intensity haunted house as a controlled fear experience displayed less brain activity in response to stimuli and less anxiety after exposure. This finding suggests that exposing yourself to horror films, scary stories or suspenseful video games may actually help calm you afterward.

What 12 Ancient Skeletons Discovered in a Mysterious Tomb in Petra Could Tell Us About the Ancient City

Twelve skeletons have been found in a large, 2,000-year-old tomb directly in front of the Khazneh (“Treasury”) in the city of Petra in Jordan. Alongside them, excavators have discovered grave goods made of pottery, bronze, iron and ceramics. There is much excitement among archaeologists because of what the rare opportunity to investigate this site might tell us about Petra’s ancient people, the Nabataeans, and their culture.

Airdropping Vaccines to Eliminate Canine Rabies in Texas – Two Scientists Explain the Decades of Research Behind Its Success

How to Prepare a Lizard For Cooking

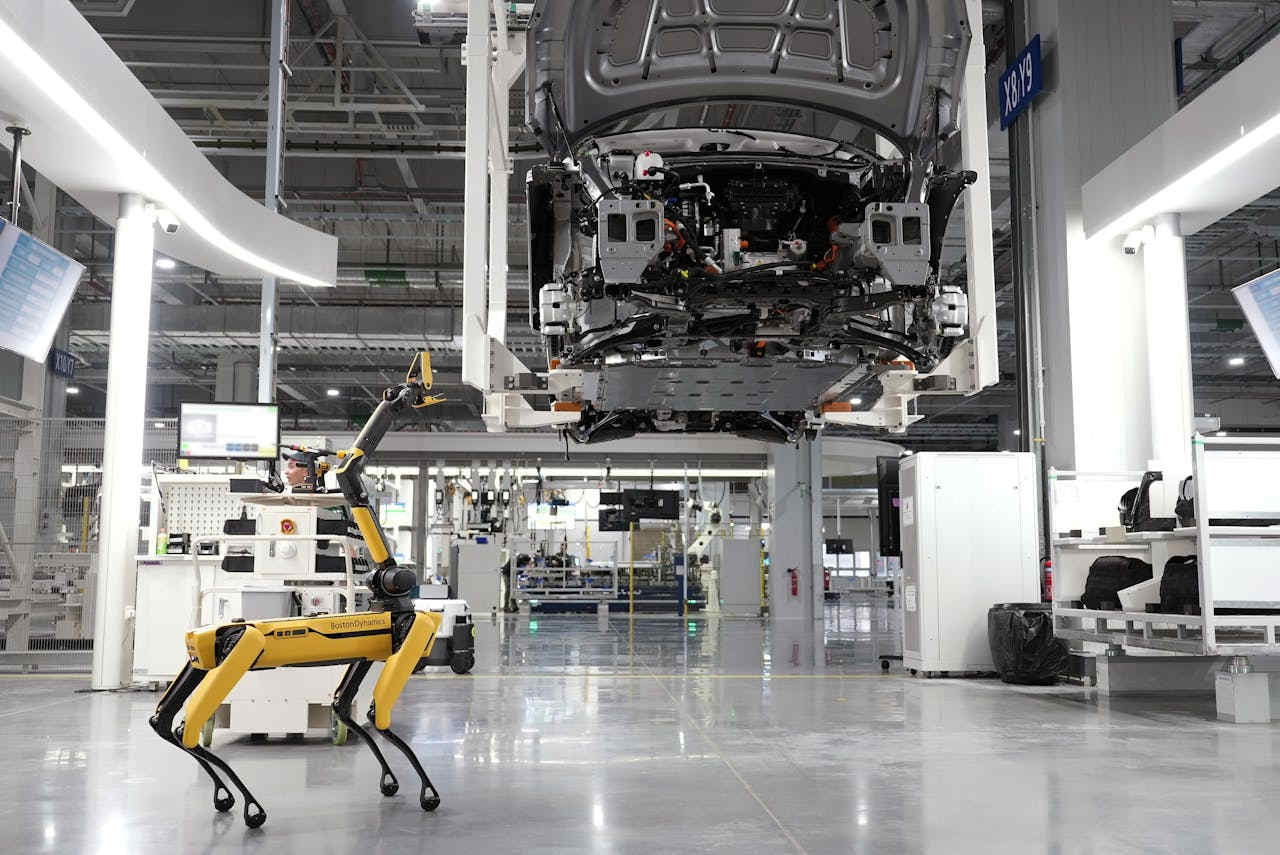

New Forms of Steel for Stronger, Lighter Cars

Automakers are tweaking production processes to create a slew of new steels with just the right properties, allowing them to build cars that are both safer and more fuel-efficient. Such materials can reduce the weight of a vehicle by hundreds of pounds, and every pound shed saves roughly $3 in fuel costs over the lifetime of the car.